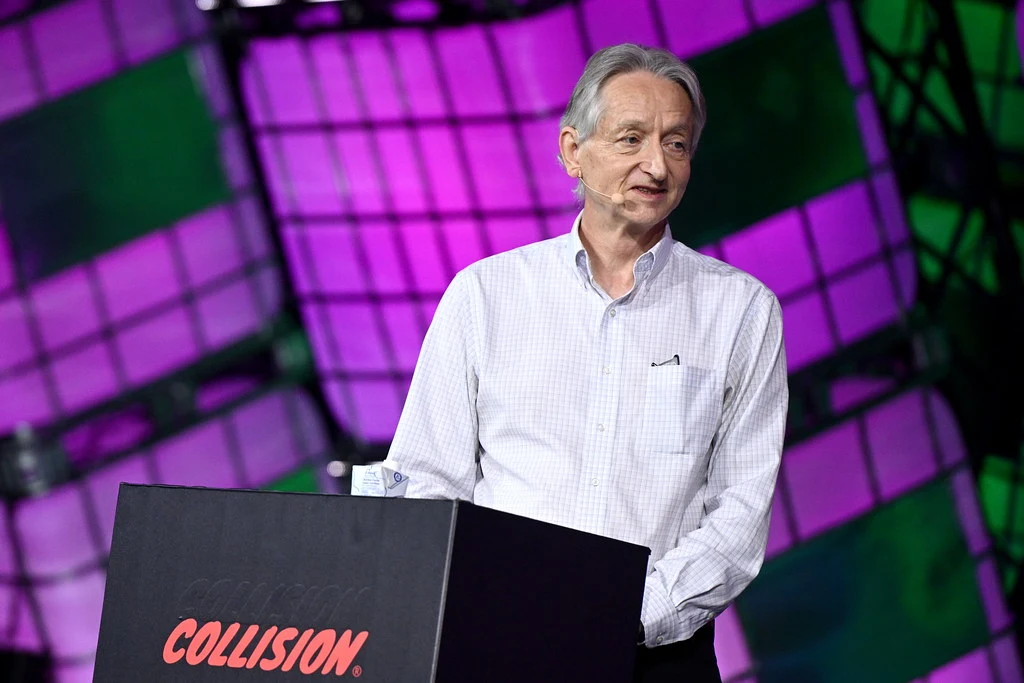

Geoffrey Hinton, a pioneer of AI and neural networks, recently won the Nobel Prize in Physics, solidifying his lasting impact on the field of artificial intelligence.

Geoffrey Hinton, a brilliant mind who personifies the new era of artificial intelligence, was recently awarded the Nobel Prize in Physics. This is more than an individual accomplishment: he joins a group of scientists whose research will be remembered as lasting contributions to their respective fields.

Geoff Hinton Wins Nobel Prize in Physics for AI Innovations

He is often described as one of the “godfathers” of AI for his foundational work on neural networks and machine learning.

Key points

1. Geoffrey Hinton’s Background:

Hinton has had a long and distinguished career in computer science. His research has helped shape the landscape of AI as we know it today.

At the Nobel Prize press conference, Hinton said he was “flabbergasted” by the award and acknowledged the collaboration, not merely the influence, of his colleagues Terry Sejnowski and the late David Rumelhart.

2. Firing of Sam Altman:

Hinton surprised many by noting that one of his former students, Ilya Sutskever, was behind the dismissal of Sam Altman as CEO of OpenAI in November 2023, an event that shook the tech industry.

Hinton said he was proud of his student but kept his remarks brief, as the matter was sensitive and complicated.

3. AI’s Explosive Growth:

In 2012, with fellow scientists Sutskever and Alex Krizhevsky, Hinton developed a “breakthrough algorithm” called “AlexNet,” which “massively improved AI’s ability to recognize images, and is often credited with launching the modern era of deep learning.”

It has been regarded as a major landmark in AI, often likened to the “Big Bang” in the field, and has stimulated unprecedented AI research and applications.

4. Concerns About Safety in AI:

Hinton is gravely concerned about the future of AI and wants more attention paid to AI safety. He pointed in particular to the view that machines could become more intelligent than humans within the next 20 years, and he emphasized that people need to begin considering the consequences.

He said that quite a few good researchers believe AI will become more intelligent than humans sometime in the next 20 years, which makes it important to tackle the problems early.

5. Controversies Surrounding Sam Altman:

Sam Altman has become a controversial figure in the AI community. Critics, including former board members like Helen Toner, have accused him of neglecting AI safety concerns and prioritizing profit over ethical considerations.

This shift towards monetization has caused rifts within OpenAI, leading to an exodus of researchers who are concerned about the direction the company is taking.

6. Demand for Safety in AI:

He called for urgent research into AI safety, arguing that powerful AI systems are highly unpredictable and could pose a serious threat to humanity.

He said, “When we get things more intelligent than ourselves, no one knows whether we’re going to be able to control them.” This statement highlights the growing concern among scientists about how unpredictable advanced AI systems actually are.

7. Legislative Challenges:

More recently, the California legislature put forward an AI safety bill to govern the application of AI technologies. The bill met strong pushback from influential Silicon Valley investors and was vetoed by Governor Gavin Newsom.

When AI progresses rapidly without adequate regulation, its benefits are likely to come with unforeseen consequences, which makes ethical oversight all the more important.

FAQs

Q1: Who is Geoffrey Hinton?

Geoffrey Hinton is a pioneering computer scientist. He recently won the Nobel Prize in Physics for his work on neural networks and his contributions to deep learning and AI.

Q2: What has Hinton said about Sam Altman?

Hinton noted that his former student Ilya Sutskever was involved in Sam Altman’s dismissal as CEO of OpenAI, and said he was proud of his student.

Q3: Why was Sam Altman fired?

The board’s decision to fire Sam Altman, the chief executive officer of OpenAI, was chaotic. It resulted in public protest and internal conflict, and Altman was reinstated after a few days.

Q4: What was AlexNet?

AlexNet is one of the most influential AI algorithms. Developed in 2012, it drastically improved image recognition technology. It is often credited with launching the modern era of deep learning and is regarded as one of the key creations of modern AI.

Q5: Why is Hinton so concerned about AI safety?

Hinton stresses the need for AI safety because, as AI becomes more advanced, AI systems may behave in unanticipated ways. He advocates more research so that the dangers of increasingly powerful AI technologies can be avoided.

Q6: What happened to the AI safety bill in California?

An AI safety bill proposed to regulate AI technologies was vetoed by Governor Gavin Newsom after very strong opposition from powerful Silicon Valley investors, who argued that such regulation was not needed.

Q7: What are some of the dangers of superintelligent AI?

Advanced AI carries the risk that humans lose control over it, that ethical problems emerge, and that at some stage its behavior becomes unpredictable in ways that could pose serious hazards to humanity. According to experts like Hinton, more research is required to understand and mitigate these risks.

Conclusion

Geoffrey Hinton’s Nobel Prize win sheds light not only on his contributions to artificial intelligence but also on the grave ethical concerns surrounding the development of AI. As the technology advances, so do the discussions about the leadership turmoil at OpenAI and the need for safety requirements. Hinton’s call for more research into AI safety reflects the demand that machines progressing towards higher levels of intelligence be put in the service of human benefit rather than relegating humans to second-class status. The prospects for AI are bright but precarious.